I was enrolled this Parallel Programming course from Maharishi University of Management. Even though I was not very new to Parallel Programming, I learnt a lot of new techniques. I have an exam next week, so I am trying to re-collect all the major topics i have read while writing this blog.

While the concept of Programming began with sequential programming, after the invention of multiple cores and multitasking, the programming is no more sequential. We have to take into consideration lots of other things to make a program work properly in a parallel way to utilize the processors available to the program.

There are three types of Multi-Processor Architecture:

1) Multi-Processor computer organization with common bus.

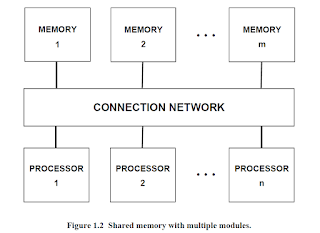

2) Shared Memory with Multiple Modules

3) Multiple Computer Organizations

One thing to note is that, some algorithms which might be best for sequential processing may be in-efficient in the parallel programming, or there may be more efficient algorithm for parallel processing which might be not so efficient in sequential processing. For e.g. We can sort an array much faster with Rank sort in parallel system, but its not so efficient in sequential processing. The best algorithm for sequential sorting is quick sort.

Writing a parallel program, you must be able to think about the different processes, local variables and global variables and the stream variables,

There are different kinds of Parallel Programming

1) Data Parallelism

2) Data Partitioning

3) Relaxed Algorithm

4) Synchronous Iteration

5) Replicated Workers

6) Pipe lined Computation

These are the main reasons of Performance Degradation:

1) Memory contention

2) Excessive sequential code

3) Process creation time

4) Communication delay

5) Synchronization delay

6) Load Imbalance

<description of the Parallel Programming>

<description of the Performance Degradation>

While creating the processes, you should be careful that the process creation has its own cost, to the amount of work that you provide a process should be enough to overcome this cost if you want any gain in time. One way is to group the amount of work and provide to each processes.

Amdahal's Law

where f = sequential portion of the code (sequential portion/total code)

p = number of processors

Here, f is always less then 1(factor). So the speedup increases if f decreases. When f=0, the speedup depends on p only, ie if you increase the number of processors to infinity, you could gain infinite speedup. The sequential portion of the code always limits the speedup you could achieve by addition of more processors. for e.g. if f=0.05, the max speedup regardless of how many processors you add in your system, will be less then 20. ie. you could only increase your execution speed up to 20 times the sequential speed.

While the concept of Programming began with sequential programming, after the invention of multiple cores and multitasking, the programming is no more sequential. We have to take into consideration lots of other things to make a program work properly in a parallel way to utilize the processors available to the program.

There are three types of Multi-Processor Architecture:

1) Multi-Processor computer organization with common bus.

2) Shared Memory with Multiple Modules

3) Multiple Computer Organizations

One thing to note is that, some algorithms which might be best for sequential processing may be in-efficient in the parallel programming, or there may be more efficient algorithm for parallel processing which might be not so efficient in sequential processing. For e.g. We can sort an array much faster with Rank sort in parallel system, but its not so efficient in sequential processing. The best algorithm for sequential sorting is quick sort.

Writing a parallel program, you must be able to think about the different processes, local variables and global variables and the stream variables,

There are different kinds of Parallel Programming

1) Data Parallelism

2) Data Partitioning

3) Relaxed Algorithm

4) Synchronous Iteration

5) Replicated Workers

6) Pipe lined Computation

These are the main reasons of Performance Degradation:

1) Memory contention

2) Excessive sequential code

3) Process creation time

4) Communication delay

5) Synchronization delay

6) Load Imbalance

<description of the Parallel Programming>

<description of the Performance Degradation>

While creating the processes, you should be careful that the process creation has its own cost, to the amount of work that you provide a process should be enough to overcome this cost if you want any gain in time. One way is to group the amount of work and provide to each processes.

Amdahal's Law

Maximum speedup = 1/(f + (1-f)/p)

where f = sequential portion of the code (sequential portion/total code)

p = number of processors

Here, f is always less then 1(factor). So the speedup increases if f decreases. When f=0, the speedup depends on p only, ie if you increase the number of processors to infinity, you could gain infinite speedup. The sequential portion of the code always limits the speedup you could achieve by addition of more processors. for e.g. if f=0.05, the max speedup regardless of how many processors you add in your system, will be less then 20. ie. you could only increase your execution speed up to 20 times the sequential speed.